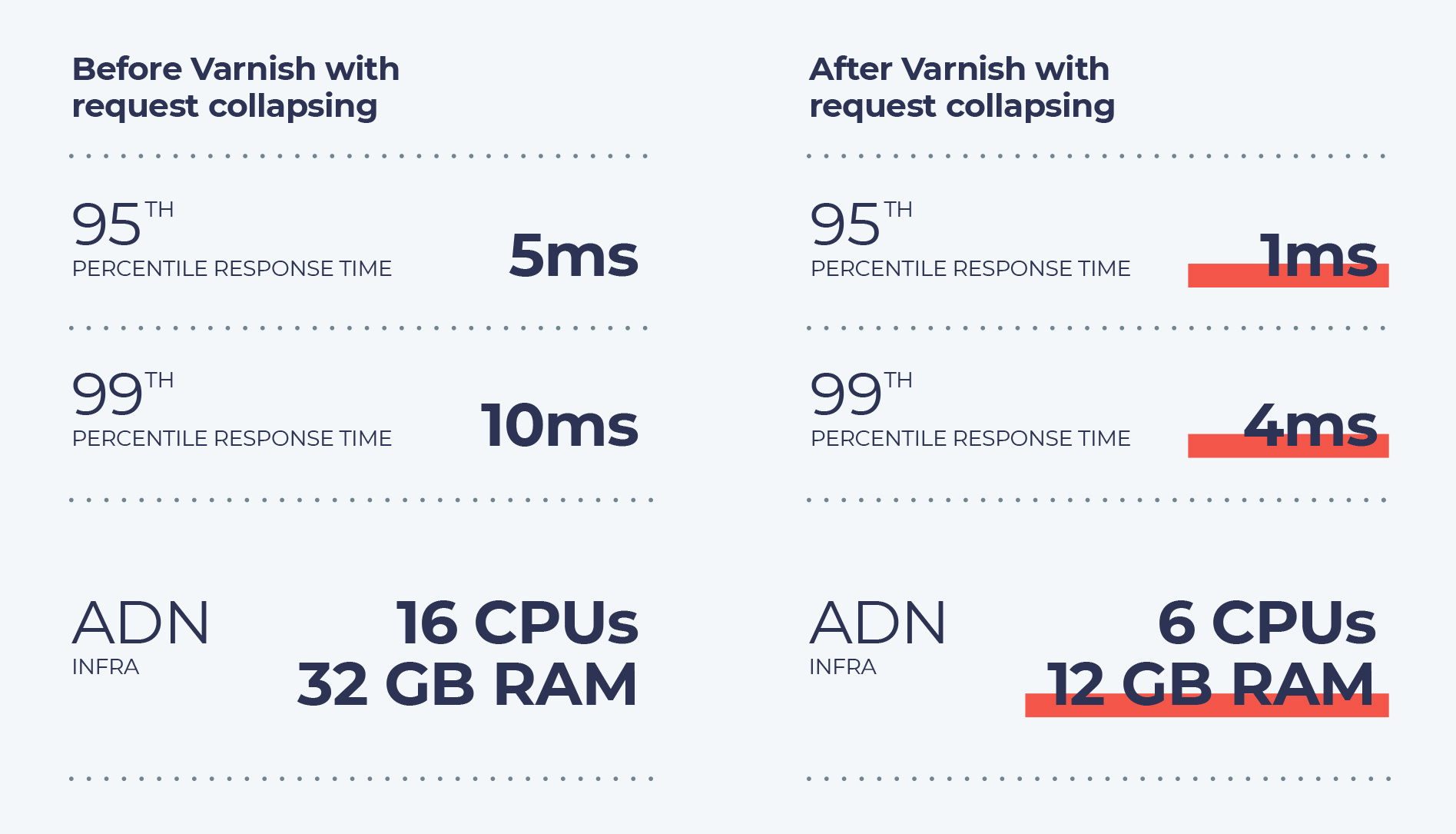

Our ADN (API Delivery Network) serves tremendous amount of traffic per minute. At peak hours, it crosses well over 200K+ rpm, yet responding in less than 2ms.

This wasn’t always the case though. The average response time was about 10ms, even after having Elixir and Redis as the technologies behind ADN. Even though 10ms is really fast for API responses, it meant that we needed more processing power to handle 200K rpm.

We didn’t quite like the idea of adding more servers to handle the scale. After investigating for a week, we realised that a lot of similar requests were coming to the ADN. We were using in-memory mechanism, but the server was still coming under quite a bit of stress.

For us, data changes really fast, every second. Any kind of caching simply cannot exceed 60 seconds.

We decided to put Varnish in front of our Elixir application and configured its request collapsing feature with a TTL of just 5 seconds.

And voila! The load on the server was down to 50K rpm with varnish serving 75% of the traffic. Suddenly, the same servers were capable of handling 4 times the load.

Picking the right tool for the problem at hand dramatically changed the resource requirements for us, allowing us scale while still reducing the server requirement.

ENJOY CRACKING TOUGH TECHNICAL PROBLEMS?

JOIN US